Voice+Tactile

Research

ACM CHI 2020 [Go to Publication URL]

#Tactile Interface #In-car User Experience #Quantitative & Qualitative User Research

Driving is increasingly adopting the Voice User Interface (VUI), which lets drivers use diverse applications with little effort. However, the VUI has innate usability issues, such as a turn-taking problem, a short-term memory workload, inefficient controls, and difficulty correcting errors.

To overcome these weaknesses, researchers in the KAIST HCI Lab (School of Computing) and I explored supplementing the in-car VUI with tactile interaction.

As an early result, we present Voice+Tactile interactions, which augment the VUI with multi-touch input and high-resolution tactile output. We designed various Voice+Tactile interactions to support the different stages of VUI interaction and derived four interaction themes: Status Feedback, Input Adjustment, Output Control, and Finger Feedforward.

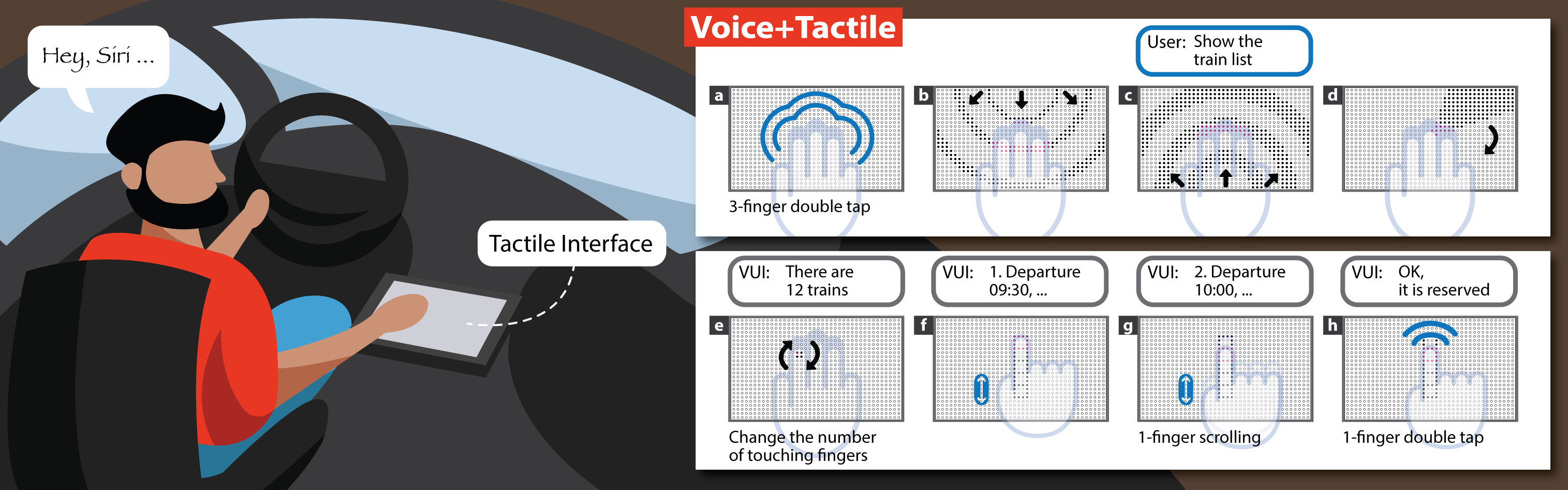

(a) A user can invoke the interface via a 3-finger double-tap. (b) In a listening state, concentric patterns indicate the interface is attending. (c) Inverted concentric patterns indicate input speech is detected. (d) A rotating fan-shape pattern indicates processing. (e) A feedforward indicates the finger used in the next step. (f-g) The user can scroll through speech output on a ladder pattern. (h) The user selects an item by double-tapping one finger.
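As a rough illustration of the flow in this figure (not the implementation used in the study), the stages and their tactile patterns can be sketched as a small state machine. The stage names, event names, and transition order below are assumptions made for the example; only the gestures and patterns come from the caption.

```python
from enum import Enum, auto


class Stage(Enum):
    IDLE = auto()
    LISTENING = auto()
    SPEECH_DETECTED = auto()
    PROCESSING = auto()
    FEEDFORWARD = auto()
    BROWSING = auto()


# Tactile pattern rendered in each stage, as described in the caption.
PATTERN = {
    Stage.LISTENING: "concentric",
    Stage.SPEECH_DETECTED: "inverted concentric",
    Stage.PROCESSING: "rotating fan",
    Stage.FEEDFORWARD: "finger feedforward",
    Stage.BROWSING: "ladder",
}

# (current stage, event) -> next stage. Event names are hypothetical.
TRANSITIONS = {
    (Stage.IDLE, "three_finger_double_tap"): Stage.LISTENING,
    (Stage.LISTENING, "speech_start"): Stage.SPEECH_DETECTED,
    (Stage.SPEECH_DETECTED, "speech_end"): Stage.PROCESSING,
    (Stage.PROCESSING, "result_ready"): Stage.FEEDFORWARD,
    (Stage.FEEDFORWARD, "finger_down"): Stage.BROWSING,
    (Stage.BROWSING, "one_finger_double_tap"): Stage.IDLE,
}


def step(stage: Stage, event: str) -> Stage:
    """Return the next stage; events that do not apply leave the stage unchanged."""
    return TRANSITIONS.get((stage, event), stage)
```

Ignoring unknown events rather than raising keeps the sketch tolerant of stray touches, which seems a reasonable default for an in-car surface.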

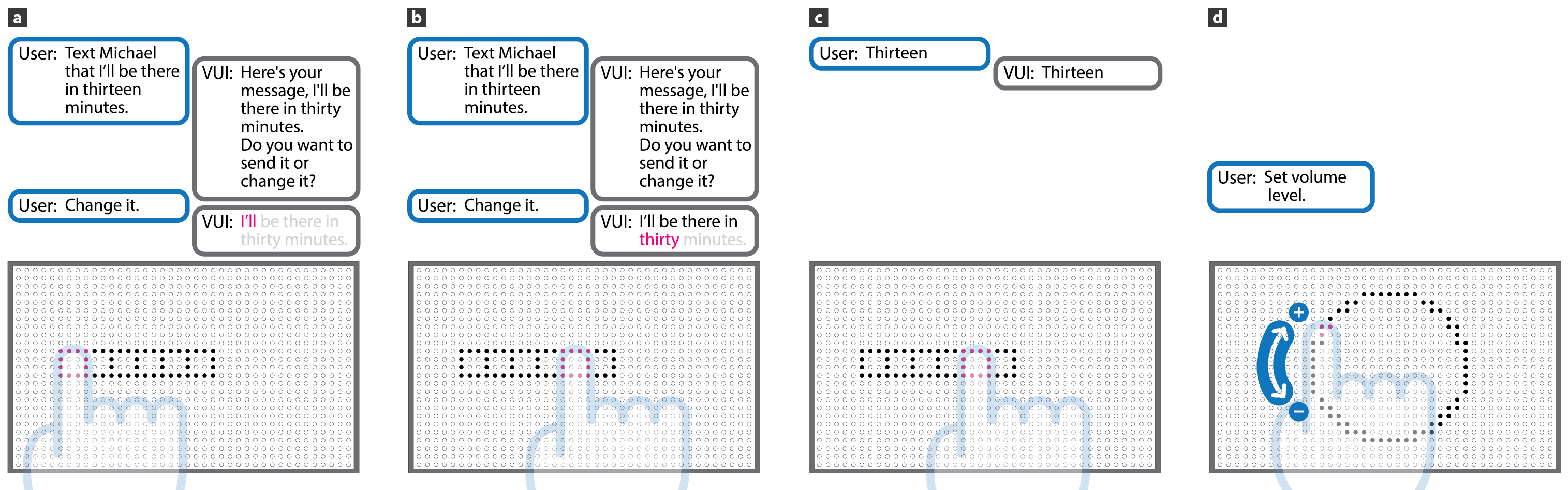

The Input Adjustment interactions: Fine-Tuning interaction (a) and Text Edit interaction (b-d). (a) A jog dial tactile pattern appears at the finger position. The user can fine-tune the music volume or light brightness by moving the finger clockwise or counter-clockwise. (b) A horizontal ladder-like tactile pattern for reviewing and editing a voice input is shown. The user can review commands at the word level via the pattern. (c) The user can locate a misrecognized word by hearing each word. (d) The user can edit a wrong word by saying the correct word.
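The Fine-Tuning jog dial can be approximated by converting the finger's angular movement around the dial center into a change in level. This is a minimal sketch under stated assumptions, not the study's implementation: the function names, the 0 to 100 range, the `units_per_turn` gain, and the choice that clockwise increases the level are all hypothetical.

```python
import math


def angle_delta(center, prev, curr):
    """Signed angle in radians swept from prev to curr around center.

    The result is wrapped to [-pi, pi] so crossing the +/-pi boundary
    does not produce a jump of a full turn.
    """
    a0 = math.atan2(prev[1] - center[1], prev[0] - center[0])
    a1 = math.atan2(curr[1] - center[1], curr[0] - center[0])
    d = a1 - a0
    return math.atan2(math.sin(d), math.cos(d))


def adjust_level(level, center, prev, curr,
                 units_per_turn=100.0, lo=0.0, hi=100.0):
    """Return the new level after the finger moves from prev to curr.

    Assumes touch coordinates with y growing downward, so a positive
    angle delta corresponds to clockwise motion on the surface.
    Assumption: clockwise raises the level, counter-clockwise lowers it.
    """
    delta = angle_delta(center, prev, curr)
    level += delta / (2 * math.pi) * units_per_turn
    return max(lo, min(hi, level))
```

For example, with the dial centered at `(0, 0)`, moving the finger a quarter turn clockwise from `(1, 0)` to `(0, 1)` raises a level of 50 by a quarter of `units_per_turn`, and the result is clamped to the `lo` and `hi` bounds.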

A user study showed that the Voice+Tactile interactions improved the efficiency and user experience of the VUI without adding significant driving distraction.

We hope these early results open new research questions on improving in-vehicle VUIs with a tactile channel.
